LangGraph Memory Example

LangGraph is a library created by LangChain for building stateful, multi-agent applications. This example demonstrates using Zep for LangGraph agent memory.

A complete notebook version of this example can be found in the Zep Python SDK repository.

The following example demonstrates building an agent using LangGraph. Zep is used to personalize agent responses based on information learned from prior conversations.

The agent implements:

- persistence of new chat turns to Zep, and recall of relevant Facts using the most recent messages.

- an in-memory MemorySaver to maintain agent state. We use this to add recent chat history to the agent prompt. As an alternative, you could use Zep for this.

Consider truncating MemorySaver’s chat history, because by default LangGraph state grows without bound. We’ve included this in our example below. See the LangGraph documentation for details.
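Here is a minimal sketch of that truncation. It assumes messages are plain dicts and uses a window of three messages, the size used later in this example. `trim_history` is a hypothetical helper, not a LangGraph API.

```python
from typing import Any, Dict, List

Message = Dict[str, Any]


def trim_history(messages: List[Message], max_messages: int = 3) -> List[Message]:
    """Keep only the newest `max_messages` entries (hypothetical helper).

    The input list is not modified; a new list is returned for prompt building.
    """
    if max_messages <= 0:
        return []
    # A negative slice returns the whole list when it is shorter than the
    # window, so no separate length check is needed.
    return messages[-max_messages:]
```

This only shortens what goes into the prompt. The checkpointed state itself still grows unless messages are also removed from it; see the LangGraph documentation for ways to do that.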

Install dependencies

Configure Zep

Ensure that the API keys for Zep and your LLM provider are configured in your environment. We’re using Zep’s async client here, but the synchronous equivalent would also work.
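A small fail-fast check catches missing keys before any client is created. This is a sketch under assumptions. The variable names `ZEP_API_KEY` and `OPENAI_API_KEY` are assumed, so adjust them to your setup. `load_api_keys` is a hypothetical helper.

```python
import os
from typing import Dict, Mapping, Optional

# Assumed variable names; the exact keys depend on your Zep and LLM setup.
REQUIRED_KEYS = ("ZEP_API_KEY", "OPENAI_API_KEY")


def load_api_keys(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return the required keys, raising if any is missing or empty."""
    source = os.environ if env is None else env
    missing = [key for key in REQUIRED_KEYS if not source.get(key)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {key: source[key] for key in REQUIRED_KEYS}
```

Pass the returned Zep key to the async client's constructor. Check the Zep SDK reference for the exact class and parameter names.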

Define State and Set Up Tools

First, define the state structure for our LangGraph agent:
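Here is a stdlib-only sketch of the state shape. It assumes the graph tracks chat messages plus the Zep user and thread identifiers. In LangGraph, the `messages` field is typically declared as `Annotated[list, add_messages]` so that node updates are appended rather than overwriting the list. The hypothetical `apply_update` below imitates only that append behaviour; the real `add_messages` reducer also merges messages by ID.

```python
from typing import Any, Dict, List, TypedDict, cast

Message = Dict[str, Any]


class State(TypedDict):
    # Chat history; in LangGraph this would be Annotated[list, add_messages].
    messages: List[Message]
    user_name: str  # Zep user the conversation belongs to
    thread_id: str  # Zep thread that new turns are persisted to


def apply_update(state: State, update: Dict[str, Any]) -> State:
    """Merge a node's partial update into state (hypothetical helper).

    `messages` is appended to, imitating an add-style reducer; every other
    key is overwritten. The input state is not mutated.
    """
    merged: Dict[str, Any] = dict(state)
    for key, value in update.items():
        if key == "messages":
            merged["messages"] = state["messages"] + list(value)
        else:
            merged[key] = value
    return cast(State, merged)
```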

Using Zep’s Search as a Tool

These are examples of simple tools that search Zep for facts (from edges) or nodes. Because LangGraph tools don’t automatically receive the full graph state, we create a function that returns tools configured for a specific user:
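The closure pattern can be sketched without external dependencies. `FAKE_GRAPH` and `search_stub` are hypothetical stand-ins for Zep's graph search, which the real tools would call with an edges or nodes scope. In LangGraph, each inner function would also be wrapped with LangChain's `@tool` decorator. The key point is that `user_name` is captured when the tools are created, so the model only has to supply a query.

```python
from typing import Callable, Dict, List

# Hypothetical in-memory stand-in for a per-user knowledge graph.
FAKE_GRAPH: Dict[str, Dict[str, List[str]]] = {
    "alice": {
        "edges": ["Alice's dog is sick", "Alice feels stressed at work"],
        "nodes": ["Alice", "dog", "work"],
    }
}


def search_stub(user_name: str, query: str, scope: str, limit: int) -> List[str]:
    """Naive keyword match over the fake graph (stands in for Zep search)."""
    items = FAKE_GRAPH.get(user_name, {}).get(scope, [])
    words = query.lower().split()
    return [item for item in items if any(w in item.lower() for w in words)][:limit]


def make_tools(user_name: str) -> List[Callable[..., List[str]]]:
    """Return search tools pre-bound to one user (hypothetical factory)."""

    def search_facts(query: str, limit: int = 5) -> List[str]:
        """Search the user's facts, which Zep stores on graph edges."""
        return search_stub(user_name, query, "edges", limit)

    def search_nodes(query: str, limit: int = 5) -> List[str]:
        """Search the user's graph nodes."""
        return search_stub(user_name, query, "nodes", limit)

    return [search_facts, search_nodes]
```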

Chatbot Function Explanation

The chatbot uses Zep to provide context-aware responses. Here’s how it works:

1. Context Retrieval: It retrieves relevant facts for the user’s current conversation (thread). Zep uses the most recent messages to determine which facts to retrieve.

2. System Message: It constructs a system message that incorporates the facts retrieved in step 1, setting the context for the AI’s response.

3. Message Persistence: After generating a response, it asynchronously adds the user and assistant messages to Zep. Zep uses this new information to create new Facts and update existing ones.

4. Messages in State: We use LangGraph state to store the most recent messages and add them to the agent prompt. For demonstration purposes, we limit the message list to the three most recent messages.
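The four steps above can be sketched as one synchronous function, with injected callables standing in for the real services. `retrieve_facts` stands in for Zep context retrieval, `call_llm` for the chat model, and `persist` for adding messages to the Zep thread; the actual example does the persistence step asynchronously. All names and the system prompt wording are hypothetical.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def build_system_message(facts: List[str]) -> Message:
    """Step 2: fold the retrieved facts into a system prompt (placeholder wording)."""
    fact_lines = "\n".join(f"- {fact}" for fact in facts) or "- (no facts yet)"
    return {
        "role": "system",
        "content": "You are a supportive assistant. Facts about the user:\n" + fact_lines,
    }


def chatbot_turn(
    history: List[Message],
    user_message: Message,
    retrieve_facts: Callable[[List[Message]], List[str]],
    call_llm: Callable[[List[Message]], str],
    persist: Callable[[List[Message]], None],
    max_history: int = 3,
) -> List[Message]:
    """Run one turn; return the new history (at most max_history + 1 entries)."""
    # Step 4: keep only the newest messages for the prompt.
    recent = (history + [user_message])[-max_history:]
    # Step 1: fetch relevant facts (stand-in for Zep context retrieval).
    facts = retrieve_facts(recent)
    # Step 2: prepend a system message built from those facts.
    prompt = [build_system_message(facts)] + recent
    reply: Message = {"role": "assistant", "content": call_llm(prompt)}
    # Step 3: persist the new user/assistant pair (stand-in for Zep).
    persist([user_message, reply])
    return recent + [reply]
```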

We could also use Zep to recall the chat history, rather than LangGraph’s MemorySaver.

See thread.get_user_context in the Zep SDK documentation.

Setting up the Agent

This function creates a complete LangGraph agent configured for a specific user. This approach allows us to properly configure the tools with the user context:
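The wiring can be sketched as a small loop that follows the common agent/tools pattern. The agent node runs first. A conditional edge (`should_continue`) sends control to a tool node when the last message requests a tool, and tool results always flow back to the agent. `create_agent`, the dict-based messages, and the `END` sentinel are illustrative stand-ins. In LangGraph itself, the same topology would be built with `StateGraph` and `add_conditional_edges`, then compiled with a `MemorySaver` checkpointer.

```python
from typing import Any, Callable, Dict

State = Dict[str, Any]
END = "__end__"  # sentinel meaning "stop"; LangGraph exposes a similar END constant


def should_continue(state: State) -> str:
    """Conditional edge: go to the tool node if the agent requested a tool."""
    return "tools" if state["messages"][-1].get("tool_calls") else END


def create_agent(
    user_name: str,
    agent_node: Callable[[State], State],
    tool_node: Callable[[State], State],
    max_steps: int = 10,
) -> Callable[[State], State]:
    """Hypothetical factory: wires agent -> (tools -> agent)* -> END for one user."""
    nodes = {"agent": agent_node, "tools": tool_node}

    def run(state: State) -> State:
        state = {**state, "user_name": user_name}
        current = "agent"  # entry point
        for _ in range(max_steps):
            update = nodes[current](state)
            state = {**state, "messages": state["messages"] + update["messages"]}
            if current == "tools":
                current = "agent"  # tool results always return to the agent
            else:
                current = should_continue(state)
                if current == END:
                    return state
        raise RuntimeError("step limit reached without reaching END")

    return run
```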

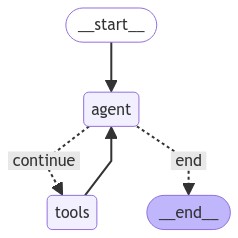

Our LangGraph agent graph is illustrated below.

Running the Agent

We generate a unique username and thread ID, add these to Zep, and create our configured agent:
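Generating the identifiers needs only the standard library. The sketch below assumes that a short random suffix is enough to keep demo usernames unique. `new_identifiers` is a hypothetical helper. Registering the user and creating the thread are separate calls on Zep's client; see the Zep SDK reference for their exact signatures.

```python
import uuid
from typing import Tuple


def new_identifiers(first_name: str) -> Tuple[str, str]:
    """Return a (user_name, thread_id) pair for a fresh demo conversation."""
    # Four hex characters keep the name readable while making collisions unlikely.
    user_name = first_name + uuid.uuid4().hex[:4]
    # A full 32-character hex UUID identifies the thread.
    thread_id = uuid.uuid4().hex
    return user_name, thread_id
```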

Let’s test the agent with a few messages:

Hello! How are you feeling today? I’m here to listen and support you.

I’m sorry to hear that you’ve been feeling stressed. Work can be a significant source of pressure, and it sounds like your dog might be adding to that stress as well. If you feel comfortable sharing, what specifically has been causing you stress at work and with your dog? I’m here to help you through it.

Viewing the Context Value

The context value will look something like this:

That sounds really frustrating, especially when you care so much about your belongings and your dog’s health. It’s tough when pets get into things they shouldn’t, and it can add to your stress. How are you feeling about that situation? Are you able to focus on her health despite the shoe incident?

Let’s now test whether the Agent is correctly grounded with facts from the prior conversation.

We were discussing your concerns about your dog being sick and the situation with her eating your expensive shoes. It sounds like you’re dealing with a lot right now, and I want to make sure we’re addressing what’s on your mind. If there’s something else you’d like to talk about or if you want to share more about your dog, I’m here to listen.

Let’s go even further back and check whether context is retained by referencing a user message that is no longer in the agent state. Zep will retrieve Facts related to the user’s job.

You’ve mentioned that you’ve been feeling a bit stressed lately, primarily due to work-related issues. If you’d like to share more about what’s been going on at work or how it’s affecting you, I’m here to listen and support you.